Students starting in 2014

Faraday wave-droplet dynamics: a hydrodynamic quantum analogue, Matt Durey

Supervisor: Paul Milewski

It has been observed on a macroscopic scale that when a small fluid droplet is dropped onto a vertically vibrating fluid surface, it will 'walk' across the surface of the bath. The behaviour of the droplet and its Faraday pilot wave is reminiscent of quantum physics: there is a particle-wave duality in which the fluid droplet can undergo processes similar to those of a particle in the quantum world. On an unbounded domain, pairs of droplets can interact with, deflect or capture each other, depending on various parameters. The quantum single-particle double-slit experiment can be reproduced for fluid droplets, with the interactions between the wave field and the slits producing a diffraction-like probability distribution for droplet positions. This phenomenon is the basis for two lines of research that Matt is exploring: (i) the fluid dynamics of droplet-Faraday pilot wave reflection at planar boundaries; (ii) the long-time stationary behaviour of models for droplet-Faraday pilot wave dynamics in confined domains.

Students starting in 2015

High-order DG methods for atmospheric modelling, Jack Betteridge

Supervisors: Eike Müller and Ivan Graham

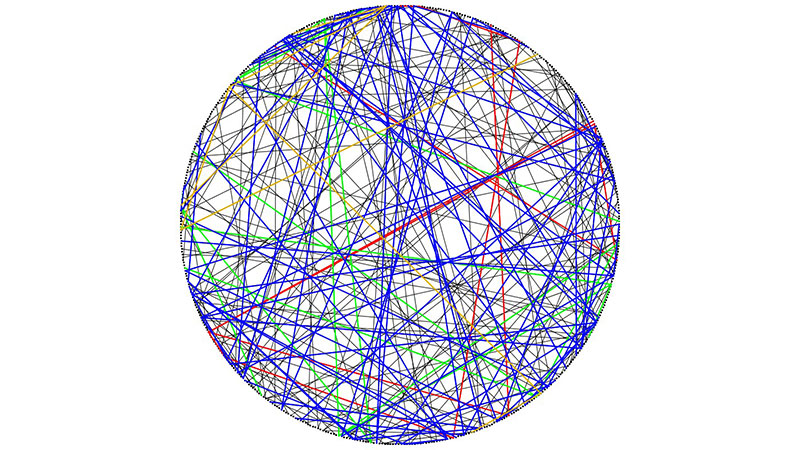

One technique for solving partial differential equations numerically is the Discontinuous Galerkin (DG) method. This method has high spatial locality, which improves parallel scalability and allows it to take greater advantage of modern (many-core) high-performance computing architectures. A hybrid multigrid approach has already been used successfully for elliptic PDEs arising from subsurface flow. Similar methods can also be applied to atmospheric modelling problems, for instance solving the Navier-Stokes equations in a thin spherical shell. Over the course of the project, Jack is looking at the computational and algorithmic aspects of implementing a solver for these atmospheric models, and at the various preconditioners that can speed up the solution.

Students starting in 2016

Spatial confounding, Emiko Dupont

Supervisor: Nicole Augustin

Spatial confounding is a problem that often occurs in environmental, ecological and epidemiological applications of spatial statistics. Models for spatial data usually include a fixed effect for the explanatory variable of interest as well as a random effect capturing spatial correlation in the data. Although including a spatial random effect generally improves the goodness of fit of the model, it can also introduce bias in the estimated fixed effect because of collinearity between the fixed and random effects, which could lead to incorrect statistical inference. This is called spatial confounding, and it is a general problem that is not restricted to any specific type of statistical model. Emiko's project is about gaining a better understanding of spatial confounding, using both real and simulated data to investigate when the problem occurs and what can be done to avoid it. She is considering both parametric and non-parametric spatial models.

Students starting in 2017

Systemic sclerosis: including prevalent and incident exposures in order to evaluate effects on cancer risk, Eleanor Barry

Supervisors: Anita McGrogan and Jonathan Bartlett

Systemic sclerosis (SSc), or scleroderma, is a long-term condition that causes thickening and hardening of the skin due to a build-up of collagen. SSc can also affect internal organs such as the kidneys, heart, lungs and gastrointestinal tract. There is believed to be a link between SSc and other serious health conditions, and Eleanor's PhD explores whether serious outcomes occur more often in people with SSc than in people who do not have SSc. Working with the Department of Pharmacy and Pharmacology, she is focusing on statistical techniques used to minimise errors when estimating the effect of SSc on the occurrence of cancer.

Students starting in 2018

Adaptive Semi-implicit Semi-Lagrangian (SISL) method for the Shallow Water System, Simone Appella

Supervisor: Chris Budd

Numerical weather prediction is an essential component of weather forecasting and climate modelling. It is based on the design of accurate and efficient numerical schemes to simulate the motion of the ocean and atmosphere. In this context, explicit numerical methods have to satisfy the CFL condition in order to be stable, which imposes a strict time step restriction. To overcome this limit, the Met Office is currently adopting the Semi-Implicit Semi-Lagrangian (SISL) method, which permits the use of larger time steps without stability issues. However, many global meteorological phenomena of relevance (storms, tsunamis) occur on a scale smaller than 25 km, which cannot be efficiently resolved by SISL on a uniform grid. A natural way to fix this is to cluster the mesh points near small features as they evolve in time. Such adaptive methods, though, are inefficient to use on their own because they are either unstable or require small time steps. This issue can be avoided by coupling them with a SISL method. Simone will investigate the adaptive SISL scheme applied to the shallow water system, which models the shallow atmosphere. He will start by examining the accuracy and stability of this method in the 1D case. This will then be extended to two and three dimensions, based on the optimal transport moving mesh strategy.

Students starting in 2019

Mathematical and statistical analysis of time series data to quantify trends and events in ocean noise, Gianluca Audone

Supervisors: Matt Nunes and Philippe Blondel

Variation in the ambient sound levels in the deep ocean has been the subject of recent studies, with particular interest in the identification of trends, features and events in the data. Many early studies demonstrated the effect of shipping on low-frequency sound dynamics in the deep ocean. However, it is now recognised that a variety of other sound sources, both natural and man-made, contribute to the soundscapes. In addition, climatic variations such as warmer oceans or changes in sea ice cover can influence sound propagation over large distances. Given the high-dimensional and complex nature of the dataset, Gianluca is developing a modelling framework to reveal intersource and intersensor dependencies in the data, i.e. to extract quantitative information about sound levels in the deep ocean. The analysis methodology includes aspects of signal processing, statistical characterisation of dynamics and machine learning techniques. Being able to separate and efficiently analyse sources of noise in this complex environment will lead to an improved understanding of the local and global causes of fluctuations in ocean acoustics, and could have an impact on short- and long-term environmental planning and marine conservation. Gianluca is working in collaboration with the National Physical Laboratory (NPL).